Scraping Telegram

The crawler, DB deployment and setup for data analysis

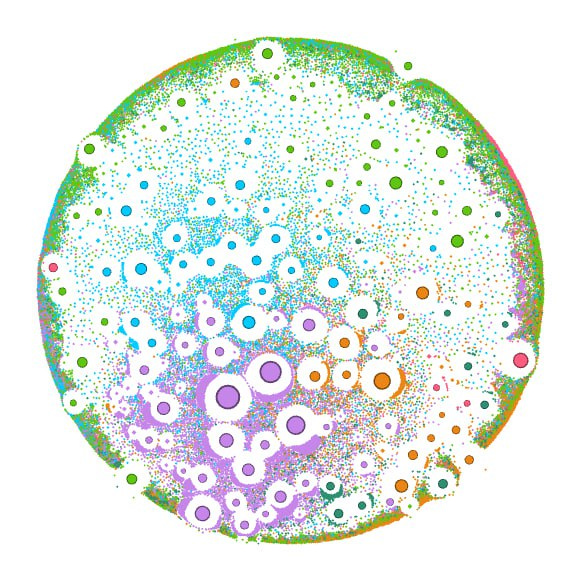

From my previous post, you may know that I scraped a huge graph of Telegram channel reposts and mentions, and what I managed to learn from the data. Here I’ll cover the technical side: my processes and work environment. In the next articles, I’ll say more about large graph analysis.

How does my spider work

It has eight legs.

To start, I found a scraper that can collect one channel by its ID. The scraper is not perfect, but it’s fast and does not require a login. My main goal was to collect the graph of reposts and mentions, so I saved only post texts and dates, plus the links parsed from each post. To this scraper, a repost looks the same as any other link, so I just checked the outlinks field and saved every link that contains t.me.

    if hasattr(item, "outlinks"):
        for link in item.outlinks:
            if "t.me" in link:
                channels.append(
                    (
                        name,
                        link,
                        link.split("/")[3].split("?")[0],
                        item.url,
                    )
                )

Links other than internal Telegram ones can also contain the substring t.me, but it’s better to collect some garbage and clean it up afterwards than to make the filter too strict.
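A cleanup pass of that kind could look roughly like this. It’s a minimal sketch, not the code from the crawler: the helper name `channel_from_link` and the set of reserved path segments are assumptions made for illustration, and names are lowercased on the assumption that Telegram usernames are case-insensitive.

```python
from urllib.parse import urlparse

# Path segments on t.me that are not channel names (an assumed, incomplete list).
RESERVED = {"s", "joinchat", "addstickers", "share", "proxy", "c"}


def channel_from_link(link):
    """Return the channel name from a t.me link, or None if the link is garbage."""
    # Links without a scheme ("t.me/name") would otherwise parse as a bare path.
    if "://" not in link:
        link = "https://" + link
    parsed = urlparse(link)
    host = (parsed.hostname or "").lower()
    if host not in {"t.me", "www.t.me", "telegram.me"}:
        return None  # e.g. "https://example.com/?ref=t.me"
    parts = [p for p in parsed.path.split("/") if p]
    # Web preview pages look like t.me/s/<channel>/<post id>.
    if parts and parts[0] == "s":
        parts = parts[1:]
    if not parts or parts[0].lower() in RESERVED or parts[0].startswith("+"):
        return None  # invite links, sticker packs, bare domain, and so on
    return parts[0].lower()
```

Run over the saved links, it keeps rows like `https://t.me/durov/123?single` (as `durov`) and drops links where t.me only appears in a query string or an invite URL.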

So that’s how we collect a list of neighbors for each channel; after that, we can add them to the scraping queue. The only thing left is to remember which channels are already collected.

It’s BFS, so the queue grows super fast, and how to rank the queue became an important question. A friend ran a simulation on the data collected up to that point and found that exploration goes faster if we rank channels by the number of incoming links. I used this method for some time, but the process seemed to get stuck in large clusters. Now I sample 10 random channels from the queue, collect them, and repeat, so the crawler has a chance to explore more widely.
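The random-batch strategy can be sketched as follows. This is an illustration under assumptions, not the crawler’s real loop: `fetch_neighbors` is a hypothetical callable standing in for the scraper, and the visited set and frontier live in memory here rather than in a database.

```python
import random


def crawl(seed, fetch_neighbors, batch_size=10, max_batches=1000, rng=random):
    """Explore the channel graph by repeatedly scraping a random batch of the frontier."""
    visited = set()      # channels already scraped
    frontier = {seed}    # channels discovered but not scraped yet
    edges = []           # (source, target) pairs: reposts and mentions
    for _ in range(max_batches):
        if not frontier:
            break
        # Uniform sampling instead of ranking by incoming links, so that one
        # large cluster cannot monopolise the queue. random.sample needs a
        # sequence; sorting also makes runs reproducible with a seeded rng.
        batch = rng.sample(sorted(frontier), min(batch_size, len(frontier)))
        for channel in batch:
            frontier.discard(channel)
            visited.add(channel)
            for neighbor in fetch_neighbors(channel):
                edges.append((channel, neighbor))
                if neighbor not in visited:
                    frontier.add(neighbor)
    return visited, edges
```

Sorting the whole frontier on every batch is fine for a sketch but would be wasteful at the scale of a real crawl, where sampling would more naturally happen in the database.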

The source code is here.

How do I store the data

TL;DR: I started from SQLite and now host and maintain Postgres in Docker.

I’m a noob at DB administration, DevOps, DataOps, and all the other …Ops things. So that is the story of pain.

Initially, I had no plan to scale the project. I only wanted to collect some part of the graph once and then move on to the fun part, data analysis. Storing data in files was already painful since I had to update and query it simultaneously, so I started with SQLite + SQLAlchemy. The DB then grew to ~60GB, which was mostly OK until I realized that I needed to move my scraper to a remote machine. Syncing a SQLite DB between machines was too hard, so I started to look for a serious DB.

I had some experience with PostgreSQL (deployed and maintained by somebody else), and everybody, including my friends, said “Just use Postgres for everything”. So I found a cheap enough DB-as-a-service provider and moved all my data there: a Python script read the SQLite tables with pandas and pushed them all to Postgres.

That was a good solution for a fast start, but scaling appeared to be too expensive. So I bought a VPS and ran Postgres in Docker.

It’s relatively easy and all the manuals are easily available, but there are some caveats I wish I had known before starting.

1. Configuration. The default Postgres config is not sane, but it’s not obvious how to choose good parameters. There are config generators for that, like PGTune (https://pgtune.leopard.in.ua/#/).

2. A non-obvious thing not mentioned in the manuals: you need to add `listen_addresses = '*'` to the config to make the DB available from outside.

3. Postgres expects a large enough shared memory for some queries. By default, Docker gives the container only 64MB, which is insufficient, and the error message from Postgres is too vague to figure that out. So add the parameter `--shm-size=1G` when starting the container.

4. If Postgres eats all the disk space, the situation is almost hopeless: you can’t even clean the DB, back it up, or do anything else that could resolve the problem. To avoid this, create a placeholder file several GB in size, filled with zeros. If the disk fills up, you can delete this file and rescue the DB.

So the steps to start your Postgres in Docker are like this (assuming you have already installed Docker):

Make a directory for the DB storage Docker volume. Keeping the data there makes it easier to move to another machine or mount it to another container.

    mkdir -p ~/postgres_vol/data/

Make a 4GB placeholder file.

    sudo dd if=/dev/zero of=/home/user/placeholder bs=1M count=4000

Create a config file and paste the parameters from points 1 and 2 of the previous list.

    nano ~/postgresql.conf
    # insert parameters from https://pgtune.leopard.in.ua/#/
    # insert `listen_addresses = '*'`
    # insert one empty line at the end (just in case)
    # ctrl + o to save
    # ctrl + x to exit the editor

Now we are ready to start the container.

    docker run -d \
        --name postgres \
        --shm-size=1G \
        -p 5432:5432 \
        -e POSTGRES_PASSWORD=MyCoo1Pa55w0rd \
        -v /home/user/postgresql.conf:/etc/postgresql/postgresql.conf \
        -v /home/user/postgres_vol/data:/var/lib/postgresql/data \
        postgres:15 -c config_file=/etc/postgresql/postgresql.conf

Then you need to create the user and DB.

    docker exec -it postgres bash
    # change user to postgres
    su postgres
    # run postgres shell
    psql
    -- create a user named username ("user" is a reserved word in Postgres)
    CREATE ROLE username LOGIN PASSWORD 'MyCoo1Pa55w0rd';
    -- create db
    CREATE DATABASE tg ENCODING 'UTF8' OWNER username;
    -- exit psql
    \q

Now restart the container with `docker restart postgres` and it’s ready.

I created the tables just with df.to_sql() from Pandas. To speed up some queries I also added indexes and constraints to reject duplicates on insertion.
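As an illustration of rejecting duplicates on insertion, here is a minimal sketch. The table and column names (`links`, `source`, `target`, `post_url`) are hypothetical, and it uses the standard-library `sqlite3` module as a stand-in so it runs without a server. The `ON CONFLICT DO NOTHING` clause is also valid in Postgres, where a driver such as psycopg2 would use `%s` placeholders instead of `?`.

```python
import sqlite3

conn = sqlite3.connect(":memory:")
# Hypothetical edge table: which channel linked to which, and from which post.
conn.execute("CREATE TABLE links (source TEXT, target TEXT, post_url TEXT)")
# The unique index speeds up lookups and lets the DB detect duplicate rows.
conn.execute("CREATE UNIQUE INDEX links_uniq ON links (source, target, post_url)")


def insert_links(conn, rows):
    """Insert rows, silently skipping any that violate the unique index."""
    conn.executemany(
        "INSERT INTO links (source, target, post_url) VALUES (?, ?, ?) "
        "ON CONFLICT DO NOTHING",
        rows,
    )
    conn.commit()


insert_links(conn, [("a", "b", "post1"), ("a", "b", "post1"), ("a", "c", "post2")])
insert_links(conn, [("a", "b", "post1")])  # re-scraping the same post adds nothing
```

With a constraint like this, the scraper can re-collect a channel without checking first which posts are already stored.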

Other infrastructure

The project runs on three machines. The first is for Postgres, and everything above is about it. The second is a weak, cheap machine for the scraper: nothing special, just a Python env and the script running in a tmux session. The third is a big powerful machine for data analysis. It runs a Jupyter server and does some additional work like backing up everything.

Jupyter server

I’ve got some recipes for creating a good environment for ML. First of all, it’s Conda with Mamba. Mamba is much better at handling dependencies and much faster. And Conda makes life easier than vanilla Python venv in cases like installing NumPy with MKL, certain versions of CUDA, and so on.

I always have at least two Conda environments. One for people and another for monsters. I keep Jupyter in a separate env so I don’t have to reinstall it if something breaks in the env I use for work.

So here is my basic recipe after installing Mamba:

    # Create base env for everything
    mamba create -n mamba310 python=3.10 numpy scipy pandas scikit-learn jupyterlab ipykernel ipywidgets tqdm sqlalchemy pyarrow psycopg2 -c conda-forge
    # Create env for jupyter only
    mamba create -n jupenv python=3.10 jupyterlab ipywidgets -c conda-forge
    # Create separate env for NLP libs because they often break everything
    mamba create -n nlpenv python=3.10 numpy scipy pandas ipykernel ipywidgets jupyterlab tqdm -c conda-forge
    # Create jupyter kernels for the envs above (each command runs inside its env)
    mamba activate mamba310
    python -m ipykernel install --name py310_mamba --display-name "Mamba py3.10" --user
    mamba activate nlpenv
    python -m ipykernel install --name nlp --display-name "NLP py3.10" --user

Now Jupyter, started from jupenv, will display the two available kernels.

Backups

Anything can happen, so if something goes wrong I want to have a way to proceed with my work from where I stopped as fast as possible. So I back up everything.

I’ve made three simple scripts to back up:

Database

Code

Datasets

Let’s start with DB

    cd /home/username/data/
    mv base_backup.sql base_backup.sql.bkp
    pg_dump -h xxx.xxx.xxx.xxx -p 5432 -U username dbname -w > base_backup.sql

This script is run by a cron job every weekend. It moves the previous backup to another file and saves a new one, so at every point I have the two latest backups. It’s worth remembering, though, that if the DB is out of order, this script will not fail but will create an empty backup. So if something goes wrong, rename the last backup or remove the cron job.
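One way to guard against the empty-backup problem is to dump into a temporary file and rotate only when the dump succeeded. The following is a sketch of that idea, not the script used in this setup: the function name `rotate_backup` and the `min_bytes` threshold are assumptions made for illustration.

```python
import os
import subprocess


def rotate_backup(dump_cmd, target, min_bytes=1):
    """Run dump_cmd into a temp file; rotate backups only if the dump looks valid."""
    tmp = target + ".tmp"
    with open(tmp, "wb") as out:
        result = subprocess.run(dump_cmd, stdout=out)
    # A failed or empty dump must not overwrite the existing backups.
    if result.returncode != 0 or os.path.getsize(tmp) < min_bytes:
        os.remove(tmp)
        return False
    if os.path.exists(target):
        os.replace(target, target + ".bkp")  # keep the previous backup
    os.replace(tmp, target)
    return True
```

It could be called with the same command as the shell script, for example `rotate_backup(["pg_dump", "-h", "xxx.xxx.xxx.xxx", "-p", "5432", "-U", "username", "-w", "dbname"], "/home/username/data/base_backup.sql")`, and a `False` result could be logged or turned into a non-zero exit code so that cron reports it.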

Datasets

I use Restic and S3 storage.

    export AWS_ACCESS_KEY_ID=
    export AWS_SECRET_ACCESS_KEY=
    restic -r s3:https://s3.fr-par.scw.cloud/backups/data --verbose backup ~/data --password-file /home/username/resticpass

Restic does incremental backups, so it allows me to roll back to a certain point in time. I save DB backups to the same directory where my datasets are, so I can restore them too.

Code

That is pretty easy. Just init a git repo in the directory where the code is, then add, commit, and push it to the remote repository on a schedule.

    # REPO_URL="git@github.com:myusername/mybackup.git"
    DIR_TO_BACKUP="/home/username/notebooks"
    # Change into the directory to back up; stop if it does not exist
    cd "$DIR_TO_BACKUP" || exit 1
    # Add all files to the next commit
    git add .
    # Commit the changes with a timestamp
    TIMESTAMP=$(date +%Y%m%d_%H%M%S)
    git commit -m "Auto backup at $TIMESTAMP"
    # Push the changes to the remote repository
    git push origin master

And everything together in cron:

    0 2 * * * sh /home/username/scripts/databackup.sh > /home/username/cronlogs/databackup.log 2>&1
    30 2 * * * sh /home/username/scripts/notebooksbackup.sh > /home/username/cronlogs/codebackup.log 2>&1
    0 0 * * 6 sh /home/username/scripts/backup_base.sh > /home/username/cronlogs/basebackup.log 2>&1

In the next series

Next time I’ll tell you more about how to handle large networks, and how to analyze and visualize them.